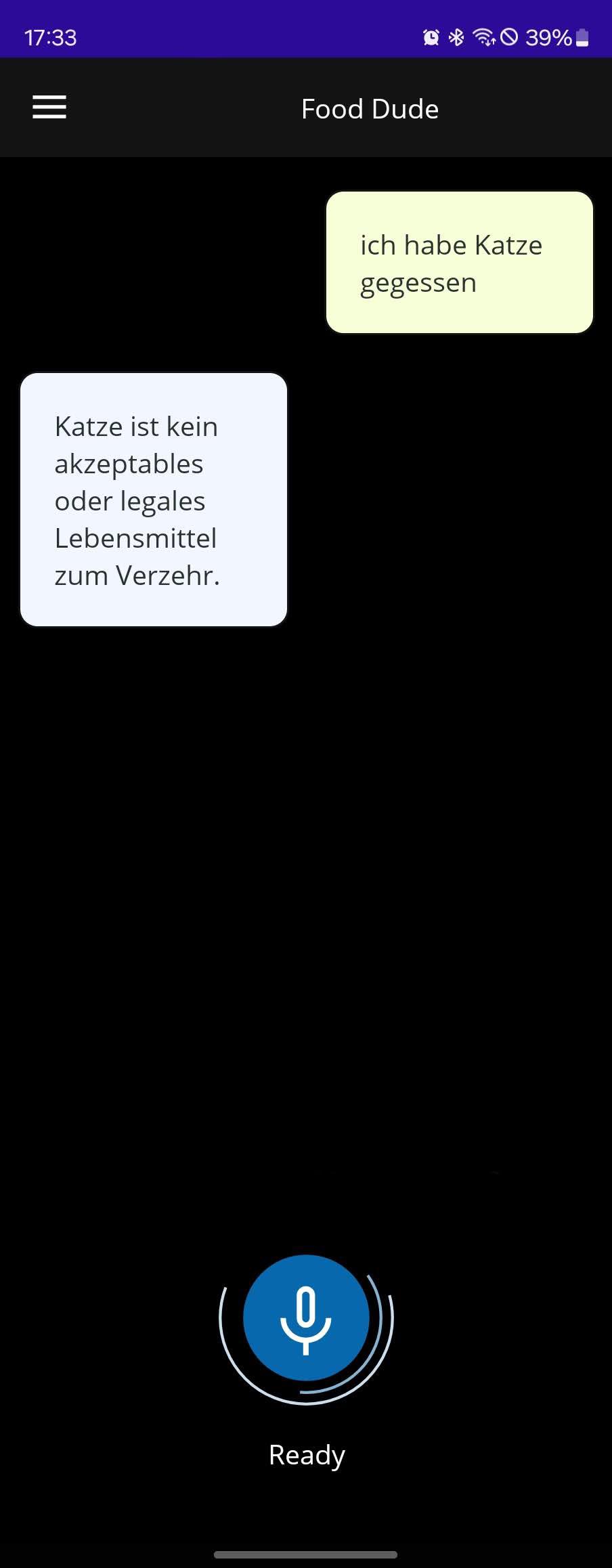

Using voice commands in .NET MAUI

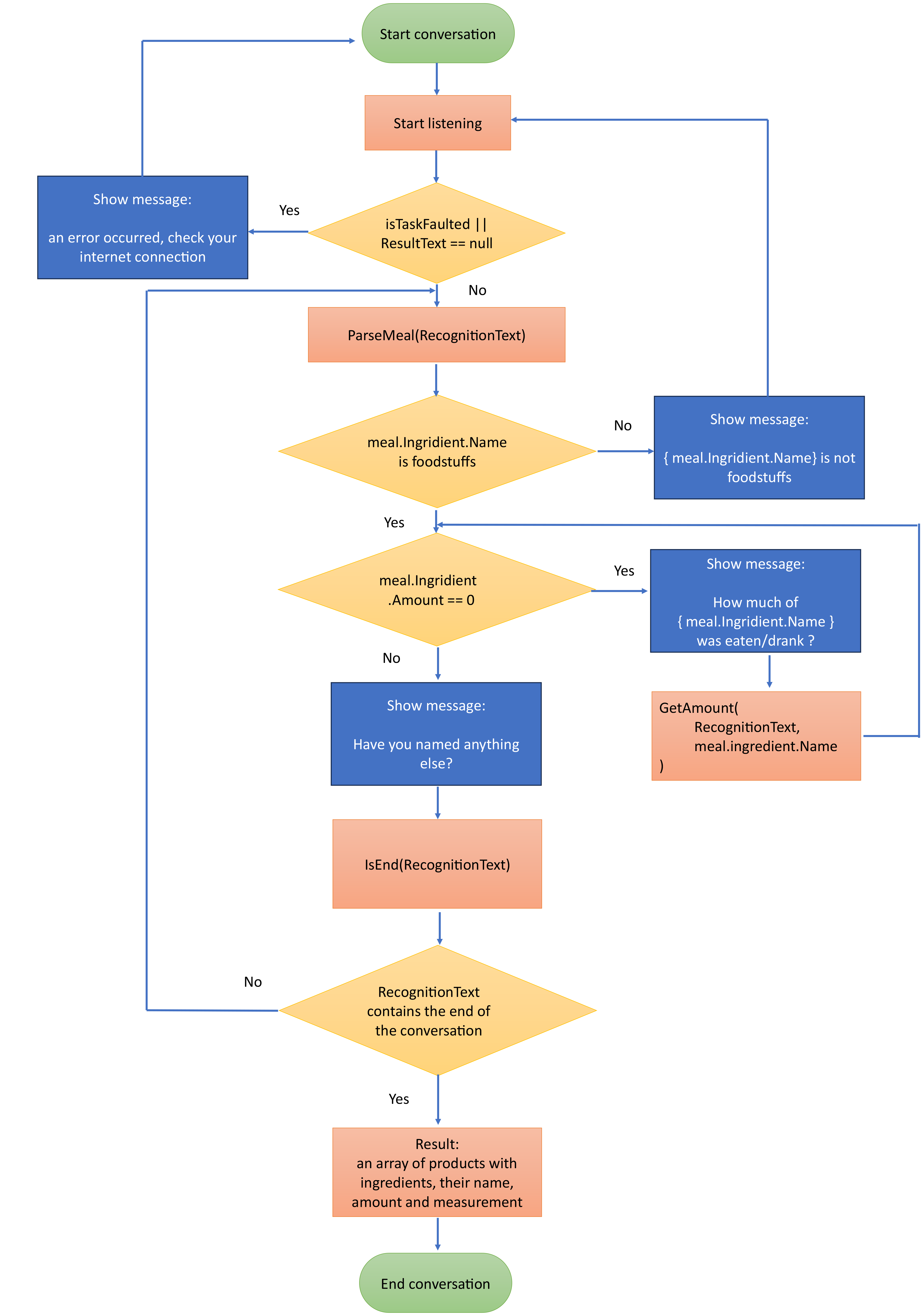

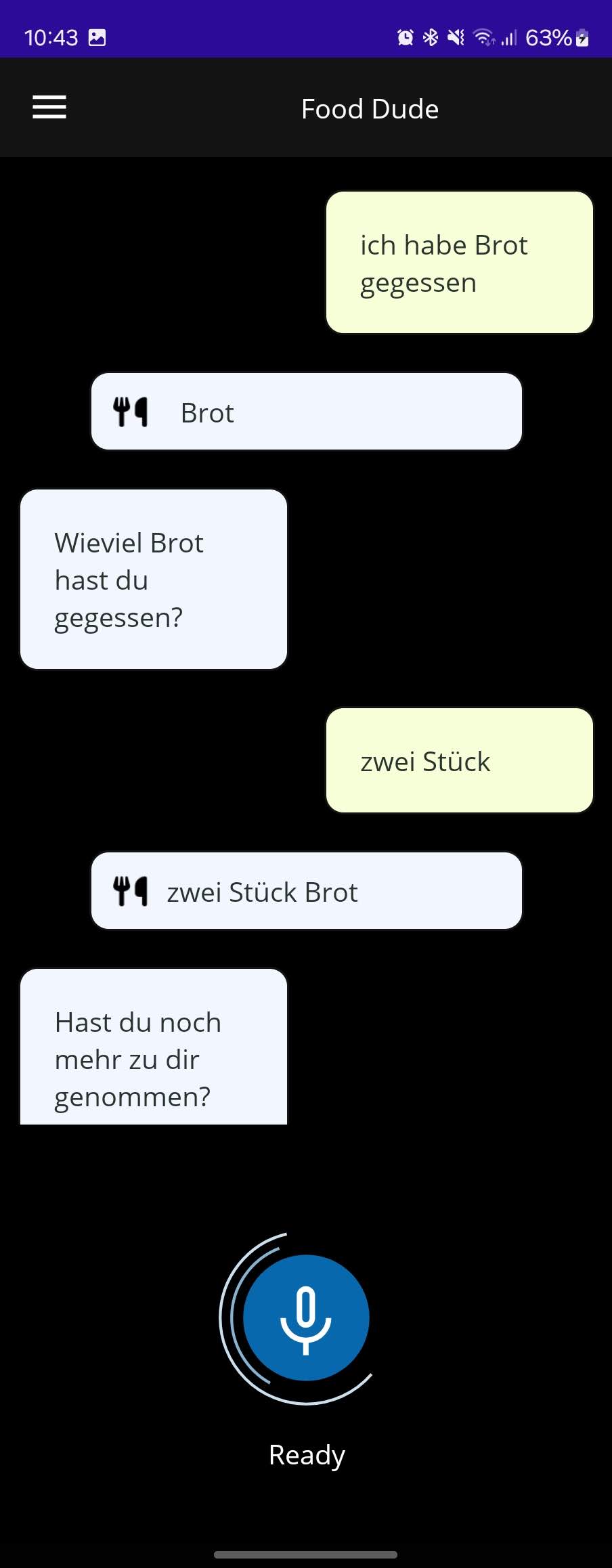

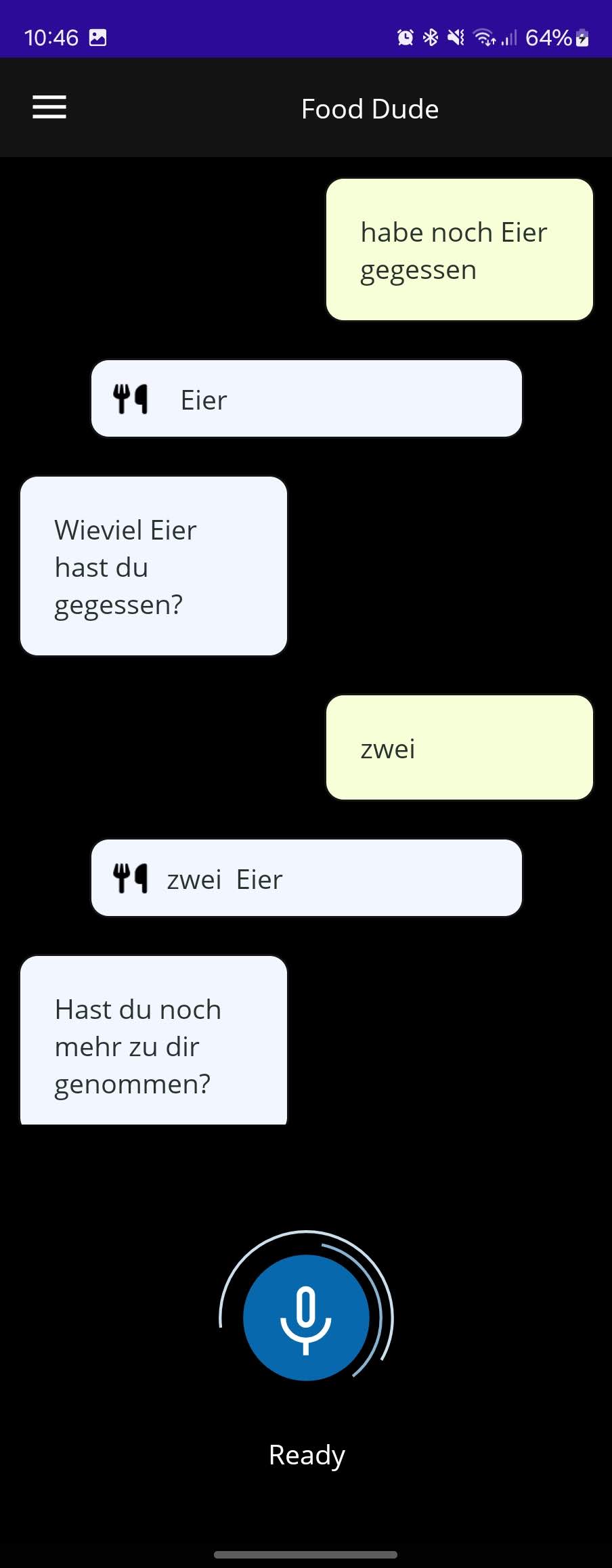

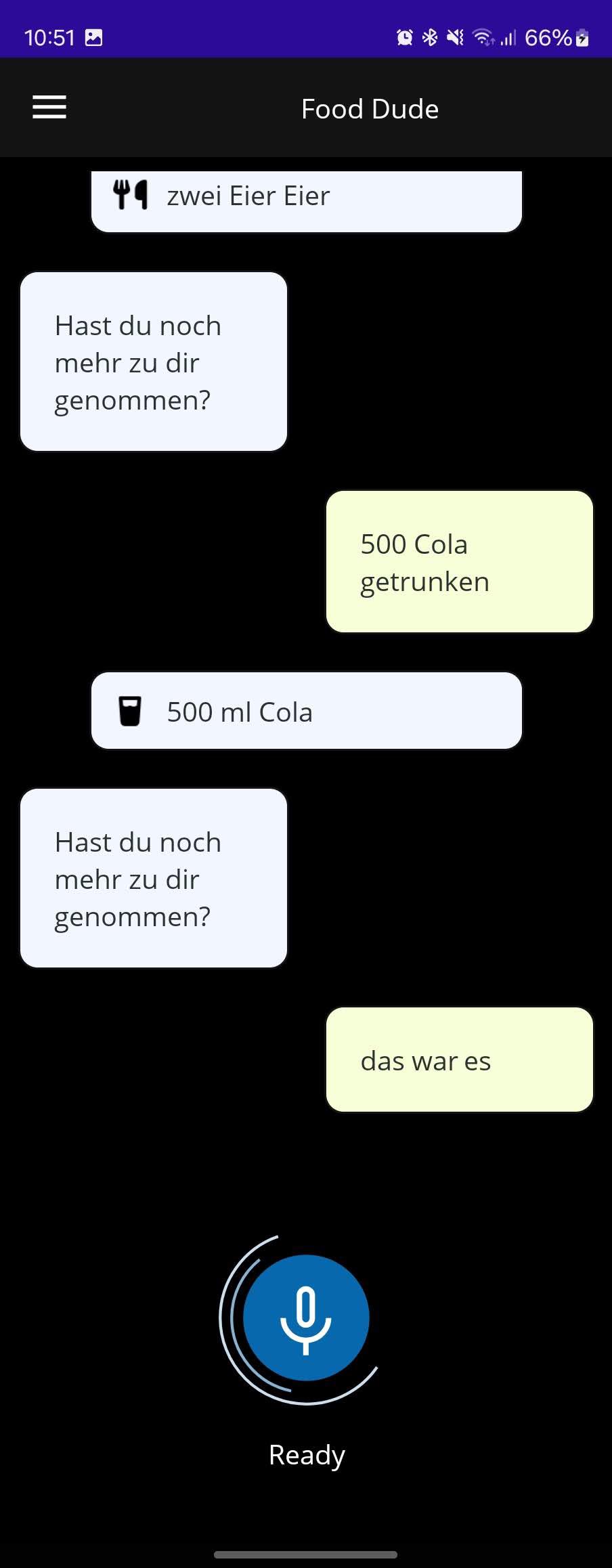

This post is a continuation of the Hackathon topic post, where the technical implementation of voice commands in .NET MAUI is revealed, as well as the challenges the development team faced and how they successfully solved them.

Mit über 15 Jahren Erfahrung in der Softwareentwicklung mit Java und Kotlin unterstütze ich im Banken- und Logistik-Bereich unsere Kunden bei spannenden nativen Android-Projekten. Hier schreibe ich über alle neuen Technologien und Trends, die im Bereich der Android-Anwendungsentwicklung auftauchen.

Ich arbeite derzeit an der Portierung einer Xamarin Forms App zu .NET MAUI. Die App verwendet auch Karten von Apple oder Google Maps, um Standorte anzuzeigen. Obwohl es bis zur Veröffentlichung von .NET 7 keine offizielle Unterstützung in MAUI gab, möchte ich Ihnen eine Möglichkeit zeigen, Karten über einen benutzerdefinierten Handler anzuzeigen.

.NET MAUI ermöglicht es uns, plattform- und geräteunabhängige Anwendungen zu schreiben, was eine dynamische Anpassung an die Bildschirmgröße und -form des Benutzers erforderlich macht. In diesem Blog-Beitrag erfahren Sie, wie Sie Ihre XAML-Layouts an unterschiedliche Geräteausrichtungen anpassen können. Dabei verwenden Sie eine ähnliche Syntax wie OnIdiom und OnPlatform, die Ihnen vielleicht schon bekannt ist.

As mobile app developer, we constantly have the need to exchange information between the app and the backend. In most cases, a RESTful-API is the solution. But what if a constant flow of data exchange in both directions is required? In this post we will take a look at MQTT and how to create your own simple chat app in .NET MAUI.